Crafting a Comprehensive Scenario Library


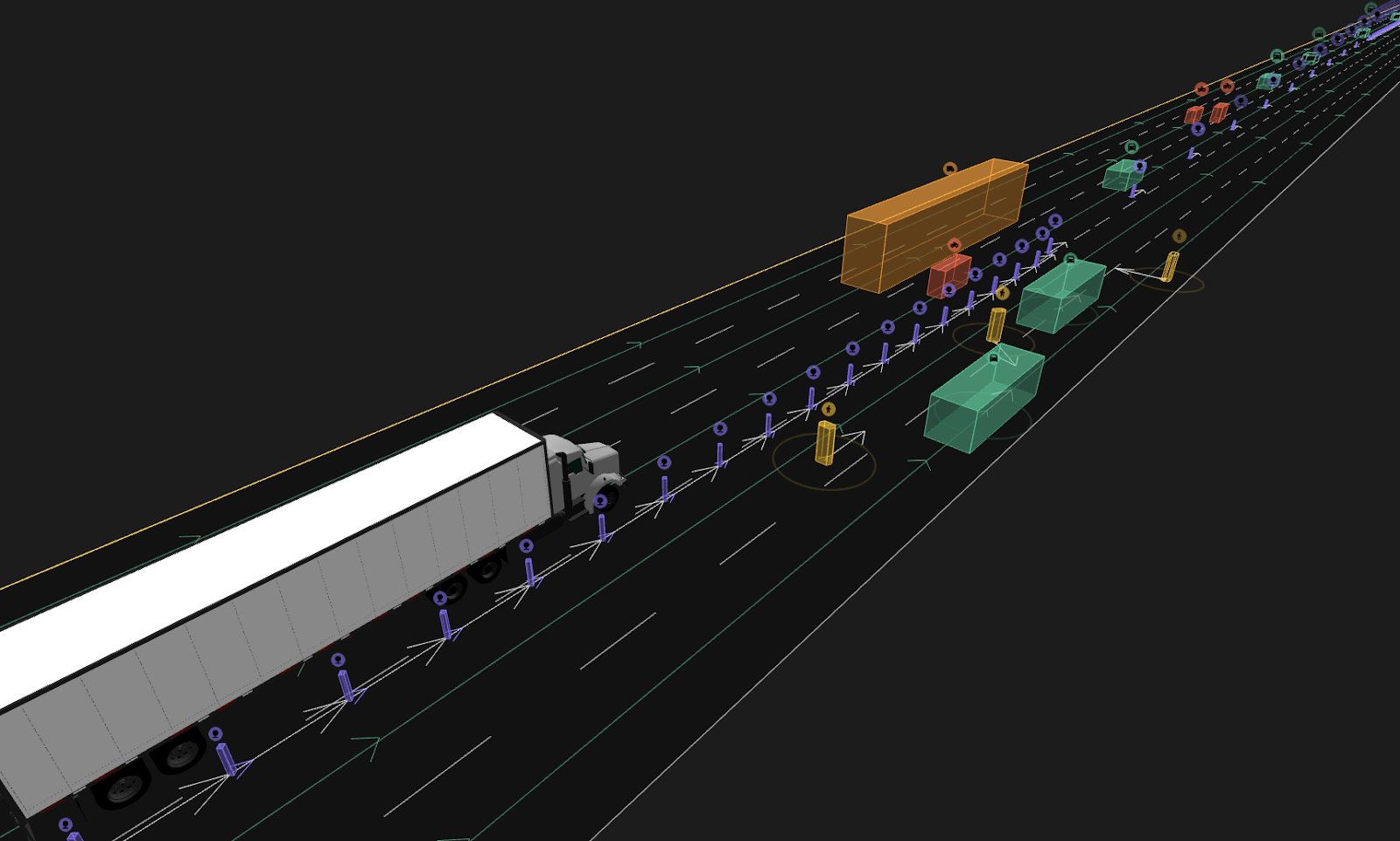

In the ever-evolving landscape of autonomous driving, comprehensive scenario libraries are pivotal for ensuring the safety and efficacy of advanced driver-assistance systems (ADAS) and autonomous driving (AD) technologies. Simulated scenario tests are well established as a highly cost-effective method for quickly discovering issues in the development of ADAS and AD systems. By enabling rigorous testing of an autonomous system's components, scenario libraries facilitate rapid evaluation with low computational demand. This blog post explores the nuanced process of building a scenario library that verifies and validates system requirements and also covers the failure modes observed through other test methods.

Requiring a set of scenario tests to pass on every code commit allows an organization to prevent regressions, ensuring that key performance metrics in critical driving situations are consistently met or exceeded. In parallel, hypothetical scenarios not yet observed in real-world testing can be explored through fully synthetic simulated scenario tests, bypassing the cost and safety risks associated with testing in the real world.

But creating a sufficiently comprehensive library of scenarios is not straightforward. The set of possible driving situations that can occur in the real world is nearly unbounded, and it’s often not intuitive how to predict which situations represent the highest risk for the autonomous system.

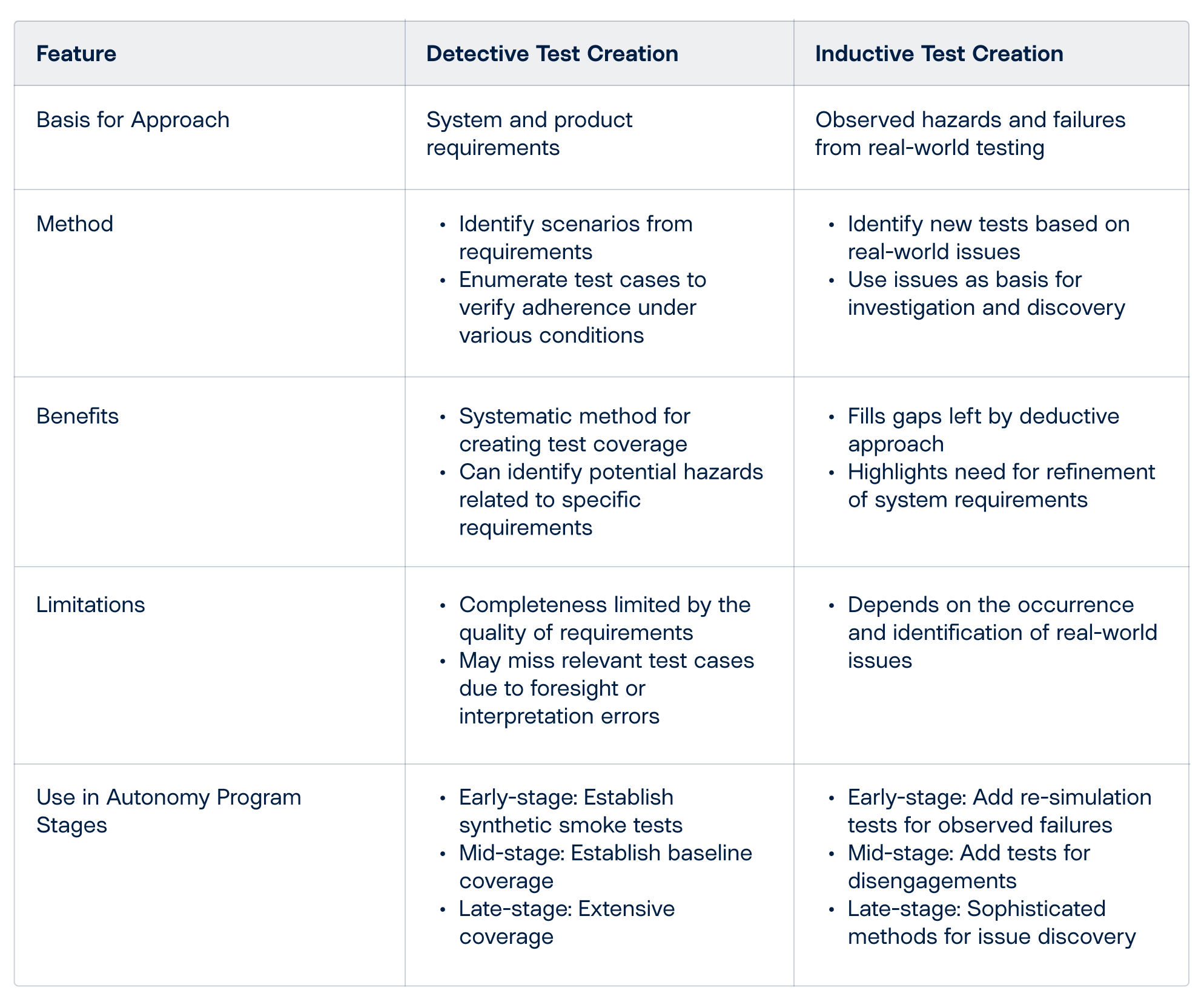

It can be helpful to start with a simple high-level framework for systematic scenario test coverage, organized by how the needed scenarios are identified. Autonomy programs can use two approaches in parallel to build out a comprehensive scenario library: the deductive approach and the inductive approach, compared in the table below.

The deductive approach identifies scenarios directly from the system and product requirements. Starting with a requirement, teams can enumerate test cases that verify the correct adherence to the requirement under a range of applicable conditions. Potential hazards related to specific requirements can be identified as well. This is a systematic method for creating test coverage.

One tradeoff, however, is that it can be only as complete as the requirements used as the basis for the test plans. In addition, relevant test cases can be missed due to insufficient foresight or errors in interpreting the underlying requirements.
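The deductive enumeration described above can be sketched in a few lines of Python. This is a minimal illustration rather than any particular tool's API: the `Requirement` class, the `enumerate_test_cases` function, the requirement ID, and the condition names and values are all hypothetical. It expands one requirement into a test case for every combination of the conditions it applies to.

```python
from dataclasses import dataclass
from itertools import product


@dataclass
class Requirement:
    """Hypothetical requirement record; field names are illustrative only."""
    req_id: str
    description: str
    # Each key is a condition dimension; each value lists the levels to test.
    conditions: dict


def enumerate_test_cases(req):
    """Return one test-case dict per combination of condition levels."""
    names = list(req.conditions)
    cases = []
    for i, levels in enumerate(product(*(req.conditions[n] for n in names))):
        case = dict(zip(names, levels))
        case["case_id"] = f"{req.req_id}-{i:03d}"
        cases.append(case)
    return cases


# Assumed example requirement and condition ranges (not from a real program).
req = Requirement(
    req_id="REQ-CUTIN",
    description="Ego keeps a safe gap when a vehicle cuts in.",
    conditions={
        "ego_speed_kph": [60, 90, 120],
        "cut_in_gap_m": [10, 20],
        "weather": ["clear", "rain"],
    },
)

cases = enumerate_test_cases(req)
print(len(cases))  # 3 * 2 * 2 = 12
```

A full Cartesian product grows quickly as conditions are added, so in practice a team would likely prune or sample the combinations. The sketch also makes the tradeoff concrete: any condition missing from the requirement never appears in the generated cases.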

The inductive approach helps fill the remaining gaps from the deductive approach, and involves identifying observed hazards and failures from real-world testing. These issues are used either directly as new scenario tests, or indirectly as the basis for further investigation and discovery of failures. These identified failures can highlight the need for further refinement of the system requirements, and additional corresponding scenario test coverage from the deductive approach.

It is important to extrapolate the potential effects of real-world issues, because an issue observed in the real world is rarely the most severe, worst-case version of itself. For example, an issue that led to a driver braking uncomfortably but safely could be much more dangerous under a different set of conditions.

The two approaches complement each other and can be used at any stage of an autonomy program.

One of the key benefits of simulated scenario testing is automating evaluation—a human does not need to be in the loop to classify the performance of the system. On one level, simulation tests can simply report a single pass/fail status for the test evaluation. At the same time, they provide a wealth of tracking information in the form of low-level data about the full simulation state over the course of the simulation run. Querying and analyzing this data in aggregate identifies trends in the development of the software under test.
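As a rough sketch of what querying results in aggregate might look like, the Python below computes a pass rate per software build and lists scenarios that passed on one build but failed on the next. The record layout (`build`, `scenario`, `passed`) and the function names are assumptions made for illustration; a real simulation platform will expose its own result schema and far richer low-level data.

```python
from collections import defaultdict


def pass_rate_by_build(results):
    """Map each build to the fraction of its scenario runs that passed."""
    counts = defaultdict(lambda: [0, 0])  # build -> [passed, total]
    for r in results:
        counts[r["build"]][0] += int(r["passed"])
        counts[r["build"]][1] += 1
    return {build: passed / total for build, (passed, total) in counts.items()}


def new_failures(results, old_build, new_build):
    """Scenarios that passed on old_build and failed on new_build."""
    status = {(r["build"], r["scenario"]): r["passed"] for r in results}
    scenarios = {r["scenario"] for r in results}
    return sorted(
        s for s in scenarios
        if status.get((old_build, s)) is True
        and status.get((new_build, s)) is False
    )


# Hypothetical results for two builds of the software under test.
results = [
    {"build": "a1", "scenario": "cut_in", "passed": True},
    {"build": "a1", "scenario": "merge", "passed": True},
    {"build": "b2", "scenario": "cut_in", "passed": False},
    {"build": "b2", "scenario": "merge", "passed": True},
]

print(pass_rate_by_build(results))        # {'a1': 1.0, 'b2': 0.5}
print(new_failures(results, "a1", "b2"))  # ['cut_in']
```

The same pattern extends to continuous metrics such as minimum gap or peak deceleration, where a trend across builds can reveal degradation before a pass/fail threshold is crossed.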

Teams should implement targeted evaluation criteria based on the performance of the ego vehicle (including safety, comfort, efficiency, legality, etc.), but also for the validity of the test itself. It is important that the scenario continues to evaluate the intended interaction that was set up at the time of test creation.

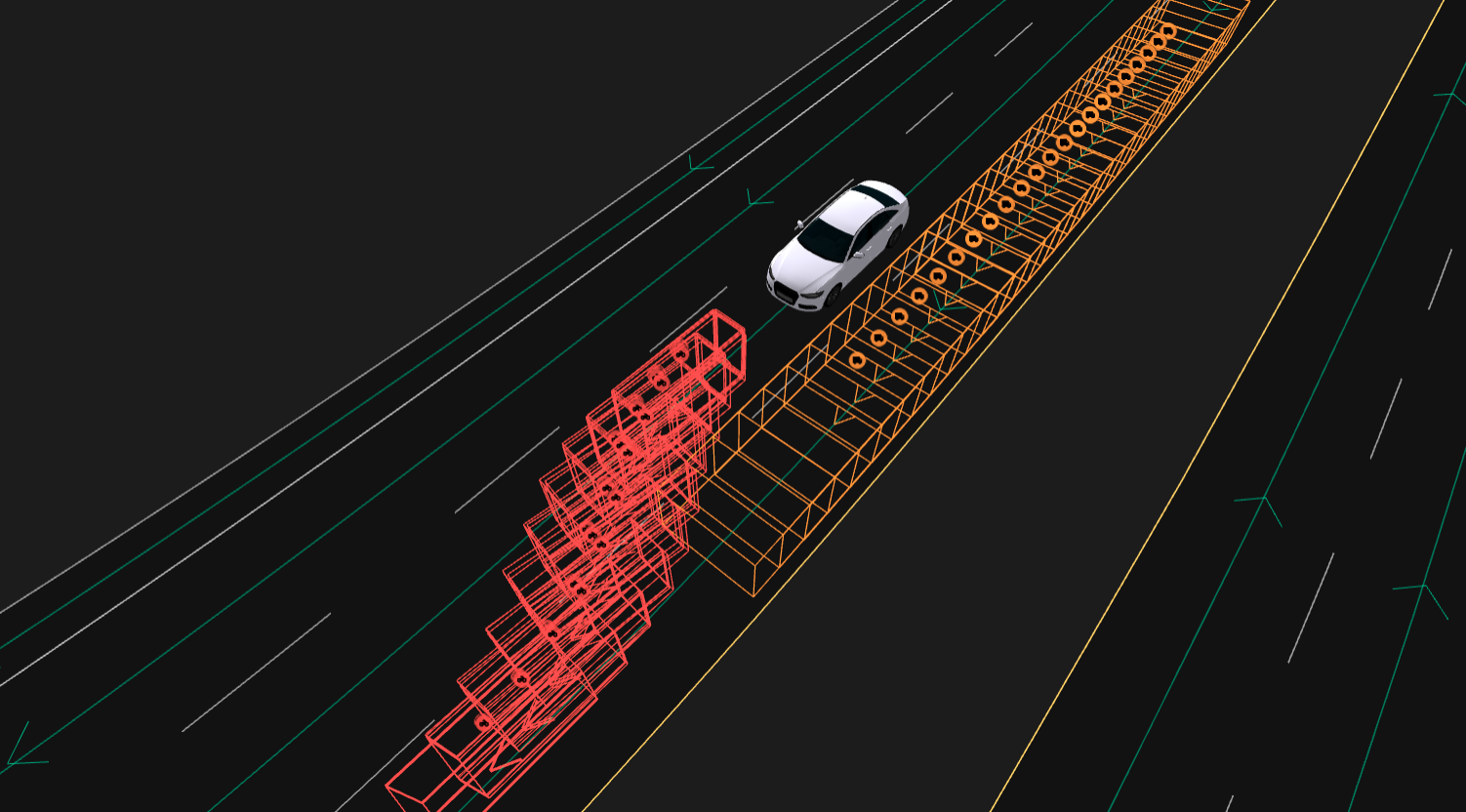

Scenario health refers to the maintained viability of the scenario test. Organizations should ensure that variance in the autonomous system’s behavior over time does not result in scenario tests becoming stale.

For example, a cut-in scenario that was created months or years ago may no longer be effective in testing a cut-in interaction today if the ego’s behavior has changed substantially. To safeguard against staleness, use dynamic simulation elements like triggered conditions or reactive behaviors, which will help maintain general applicability to a range of possible behaviors from the ego.
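One way to detect this kind of staleness automatically is a validity check that runs alongside the ego performance criteria. The sketch below is a hypothetical example, not a real tool's API: it assumes each simulation step records the ego's and the actor's longitudinal position (`ego_s`, `actor_s`, in meters) and lane IDs, and it reports whether the actor actually entered the ego's lane within a chosen gap ahead of the ego. The 30 m default is an arbitrary illustrative threshold.

```python
def cut_in_occurred(trace, max_gap_m=30.0):
    """Return True if the actor entered the ego lane close ahead of the ego.

    trace is a time-ordered list of dicts with the assumed keys
    ego_s, ego_lane, actor_s, actor_lane.
    """
    for prev, cur in zip(trace, trace[1:]):
        entered = (
            prev["actor_lane"] != prev["ego_lane"]
            and cur["actor_lane"] == cur["ego_lane"]
        )
        gap = cur["actor_s"] - cur["ego_s"]
        if entered and 0.0 < gap <= max_gap_m:
            return True
    return False


# Same scripted actor in both runs; only the ego's behavior differs.
healthy_run = [
    {"ego_s": 20.0, "ego_lane": 1, "actor_s": 40.0, "actor_lane": 2},
    {"ego_s": 30.0, "ego_lane": 1, "actor_s": 52.0, "actor_lane": 1},
]
stale_run = [
    {"ego_s": 0.0, "ego_lane": 1, "actor_s": 40.0, "actor_lane": 2},
    {"ego_s": 5.0, "ego_lane": 1, "actor_s": 52.0, "actor_lane": 1},
]

print(cut_in_occurred(healthy_run))  # True: actor merges 22 m ahead
print(cut_in_occurred(stale_run))    # False: actor merges 47 m ahead
```

In the second run the ego drives more slowly, so the scripted actor changes lanes far ahead and the test no longer exercises a cut-in. A triggered condition that starts the lane change relative to the ego's position, rather than at a fixed time, would keep the interaction intact.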

Establishing review pipelines organizationally can catch stale scenarios so they can be adjusted and quickly returned to the scenario library used for regression testing. Regularly reviewing important scenario sets comprehensively may be necessary, especially after large changes to the autonomous system behavioral software or underlying map data.

Organizations will naturally want to understand quantitatively to what extent their scenario libraries have “covered” their target deployment operational design domain (ODD). At a high level, this process begins with a formalized definition of the ODD, and then involves measuring how much of it is tested by the scenario library. Teams can also measure the arrival rate of new “surprises,” which are real-world events or unforeseen novel failures.
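To make the idea concrete, here is one deliberately simple way to compute such a number, assuming the ODD is formalized as a set of discrete dimensions and each scenario is tagged with one value per dimension. The dimension names, values, and the `odd_coverage` function are illustrative assumptions, and this combinatorial definition is only one of several possible coverage measures.

```python
from itertools import product


def odd_coverage(odd, scenarios):
    """Return (fraction of ODD cells covered, sorted list of uncovered cells).

    odd maps each dimension name to its allowed values; a cell is one
    combination of values, ordered by sorted dimension name.
    """
    names = sorted(odd)
    all_cells = set(product(*(odd[n] for n in names)))
    covered = {tuple(s[n] for n in names) for s in scenarios} & all_cells
    return len(covered) / len(all_cells), sorted(all_cells - covered)


# Hypothetical two-dimensional ODD and scenario tags.
odd = {
    "road_type": ["highway", "urban"],
    "weather": ["clear", "rain"],
}
scenarios = [
    {"road_type": "highway", "weather": "clear"},
    {"road_type": "highway", "weather": "rain"},
    {"road_type": "urban", "weather": "clear"},
    {"road_type": "urban", "weather": "clear"},  # duplicate cell, counted once
]

fraction, missing = odd_coverage(odd, scenarios)
print(fraction)  # 0.75
print(missing)   # [('urban', 'rain')]
```

The list of uncovered cells is often more useful than the percentage itself, since it points directly at the scenarios still to be written.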

For more on measuring domain coverage on ODDs, see Applied Intuition’s blog post Using Domain Coverage to Measure an Automated Driving System’s Performance in a Given ODD.

At Applied Intuition, we offer Test Suites for a wide range of common domains, including highway, urban, and parking. We design these scenario libraries around common industry requirements for ADAS or AD systems. The libraries are ready to run out of the box, with extensive parameterization and traceability to requirements and to the ODD taxonomy. They can run at the object and sensor level, can be edited directly, and can be re-targeted to other maps because their specification is map-agnostic. Test Suites are delivered on synthetic maps with corresponding 3D worlds for sensor simulation, and can be easily moved or copied to new locations. We can also customize scenario creation for nonstandard system requirements. Other tools worth checking out are Data Explorer, which can be used to identify log events where scenario creation is merited, and Cloud Engine, which scales and orchestrates the execution of scenario tests.

Access sample scenarios for free here, and contact our engineering team to learn more.